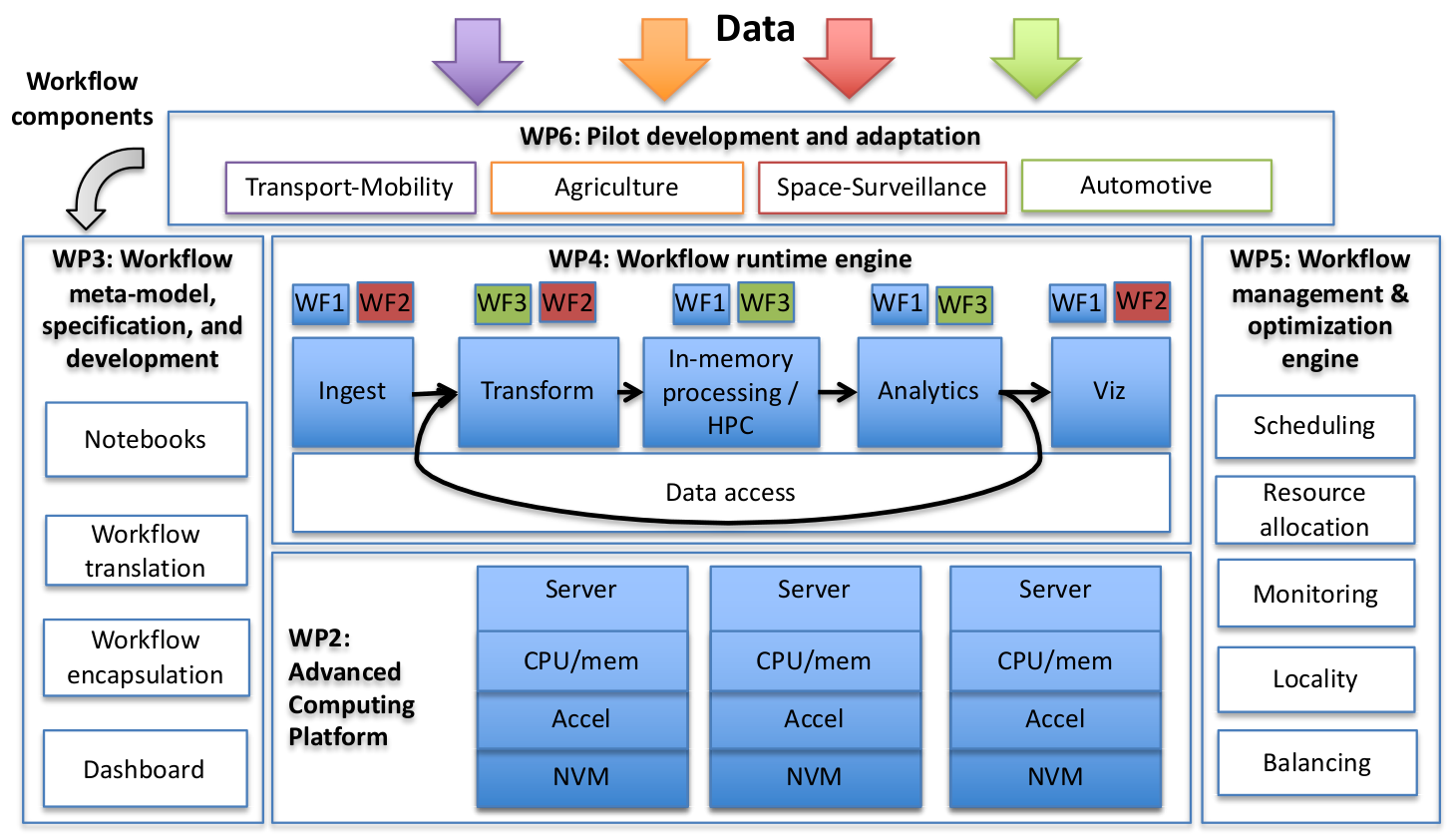

Creating new data-intensive services, whether the demand comes from dataset size or from data processing, is an onerous and costly process that requires deep expertise.

It requires performance beyond what commodity systems can achieve, business logic that typically has to be expressed by writing application code, complex software stacks that are hard to deploy and maintain, and dedicated, per-application testbeds to reach the desired performance levels. However, most organizations today lack these resources and the associated expertise.

EVOLVE addresses these issues by offering new HPC-enabled capabilities in data analytics for processing massive and demanding datasets without requiring extensive IT expertise. It aims to build a large-scale testbed by integrating technology from the HPC, Big Data, and Cloud fields. This advanced HPC-enabled testbed is able to process unprecedented dataset sizes and handle heavy computation, while allowing shared, secure, and easy deployment, access, and use.